In an era of deepfakes, AI avatars, and algorithmic manipulation, trust in video is under siege. Digital feeds are flooded with synthetic clips that look real. They blur the line between genuine user-generated and AI-generated video.

For CMOs, content marketers, and social media leads, this is more than a tech trend. It is a call to action around AI content disclosure. My stance is simple: every AI-generated video should be labeled on every platform, and user-generated content must come from real humans. I will cover why this protects consumer trust, why authentic UGC matters, what the opossum deepfake taught us, and what marketing ethics for AI should look like now.

Key Takeaways:

- Label Every AI Video: AI-generated video content should be clearly labeled as such across the web, TV, social media, and ads to maintain transparency and trust.

- UGC Means Human: Authentic user-generated video comes from real people, not algorithms. This is a crucial distinction for honesty and cultural integrity.

- Deepfake Opossum Lesson: A viral AI-generated video of an opossum “playing dead” in front of a mirror (something real opossums do not do) demonstrates how unmarked fakes can mislead viewers and distort reality.

- AI’s Powerful but Limited Role: AI delivers life-changing benefits in research, accessibility, and productivity, but when it comes to human stories and brand trust, genuine human authenticity matters most.

- Marketing’s Responsibility: As campaigns blend AI and real content, marketers must be transparent and ethical, ensuring that audiences know what is real and what is synthetic.

- BrandLens Solution: BrandLens serves as a verified human content pipeline, collecting videos with secure timestamps, identity verification, participant consent, and human-led prompts so brands get authentic UGC they (and their audiences) can trust.

The Flood of AI-Generated Videos and the Erosion of Trust

Why AI-Generated Video Is Hard to Spot

The rise of AI-generated video is creating a new trust problem in digital media. Deepfakes can swap faces, synthesize voices, and generate very realistic scenes. That weakens the long-held assumption that seeing is believing.

Most people struggle to tell an AI-made video from real footage. In one survey of 2,000 consumers, only 0.1% correctly identified every deepfake vs real clip, even after being warned. People also found deepfake videos harder to spot than images. They missed fake videos 36% more often, even when they felt confident.

What This Means for Marketers

Why does this matter for marketers? Trust is the currency of engagement. If audiences can’t trust what they see on your channels, every video becomes suspect. Consumer skepticism is on the rise, especially among digital natives. Pew research shows younger adults now trust social media content almost as much as traditional news, yet 86% of consumers still say they gravitate toward brands that feel “authentic and honest” online. In other words, people want authenticity, but they’re swimming in a sea of perfect forgeries. This “trust-whiplash” means brands that double down on real, verifiable human content can stand out as beacons of honesty.

Mandating Transparency (EU, US): Label All AI-Generated Video Content

Regulation Is Forcing AI Content Disclosure

It’s time for the industry to draw a hard line: every AI-generated video must be clearly labeled as artificial. Whether it’s a manipulated political clip on TV, a synthetic spokesperson in an ad, or a deepfake influencer on Instagram, viewers deserve an upfront disclaimer. Regulators worldwide are moving in this direction. The European Union’s AI Act explicitly mandates clear labeling of deepfake and AI-generated content at first exposure. Article 50 of the EU AI Act imposes transparency obligations so that any AI-manipulated video or image is disclosed as “artificially generated,” in terms that users can’t miss. This means a brand or creator deploying a synthetic video in Europe must flag it as AI-made from the start, or risk penalties under law.

The United States Federal Trade Commission (FTC) is also cracking down. In August 2024, the FTC finalized a new rule banning testimonials “by someone who does not exist,” enabling fines up to $51,744 per violation for any undisclosed synthetic endorsements. If a company uses an AI-generated “customer” or a fictional avatar without disclosure, the FTC can treat it as deception.

As I noted in a previous BrandLens blog post, the updated FTC Endorsement Guides expanded the definition of an “endorser” to include virtual influencers and other computer-generated personas. The message is clear. AI content cannot masquerade as human. If it’s not real, you must tell the audience. The FTC’s guidance on fake reviews also prohibits any review or testimonial created to mislead consumers, including those generated by artificial intelligence.

Why Labels Build Trust

These regulatory moves aren’t red tape for its own sake. They act as guardrails that preserve trust in an age of AI. Labeling AI videos supports honesty and consent. Viewers deserve to know when a clip is synthetic.

When platforms clearly tag AI-generated media, they set a baseline expectation that brands will not deceive audiences. Some brands now treat AI content disclosure as a trust signal. One industry analysis notes that more companies are moving from hiding AI usage to announcing it openly as a badge of transparency. Labeling AI videos is becoming a best practice for ethical marketing.

By our estimate, 95% of AI videos slip through unlabeled. Detection tools and platform policies are struggling to keep up. Meta’s own documentation shows its automated AI content labels currently apply only to still images. That leaves video and audio with fewer built-in guardrails.

Deepfake detectors can also fail after simple edits. A 2025 benchmark found that one round of post-processing dropped detection accuracy to 52%. As long as platforms cannot reliably catch every fake, the onus is on brands and creators. Default to clear identification. If you use an AI avatar, say so. If a story is synthetic, flag it. In a world of convincing fakes, clarity is the safer strategy.

UGC Means Human: Why Authentic User-Generated Video Can’t Be AI

When AI-Generated Video Is Misrepresented as UGC

Equally important is protecting the integrity of user-generated content (UGC). By definition, UGC videos are created by real humans sharing genuine experiences and not by AI algorithms. This distinction may seem obvious, but it’s becoming blurred as many marketers attempt to pass off polished AI clips as “organic” content. I argue emphatically that the moment AI enters the picture, it ceases to be true UGC. An AI-generated video might mimic the style of a TikTok testimonial or a customer review, but it is fundamentally not the voice of a customer. Calling it “UGC” is not only incorrect but also a breach of trust with your audience and community.

Once UGC becomes synthetic, it stops being user-generated.

Authenticity is the whole appeal of UGC. People trust UGC because it’s real: real opinions, real faces, real creativity from users, not perfected corporate messaging. Polls consistently show that consumers place higher trust in user-generated videos and reviews than in brand-produced ads. But if brands dilute UGC with fakes, that trust evaporates. As our own CEO at BrandLens, Vahag Karayan, put it in a recent LA Examiner feature: “AI-generated video isn’t user-generated content. Real UGC comes from people sharing genuine stories, with AI only supporting the process.” In other words, the role of AI in UGC should be limited to facilitation (like helping edit videos or moderate content), never creating the content or persona itself. The human must remain at the core.

How Synthetic UGC Erodes Culture and Trust

Insisting on human-only UGC is also about cultural integrity. User-generated videos have become a cultural force, from viral challenges and unboxing videos to citizen journalism. These real voices and perspectives shape trends and public discourse. If we start filling UGC channels with AI simulations, we risk warping culture with a flood of synthetic voices that have no lived experience. It’s the digital equivalent of astroturfing. Imagine a “grassroots” social media trend that was actually sparked by dozens of AI-created clips seeded by a bot network. That would undermine the very concept of community-driven content. To keep our online culture honest and human, we must preserve spaces for authentic human expression.

User-generated must remain user-generated!

Why This Matters for Compliance

From a regulatory standpoint, misrepresenting AI content as “user-generated” could also run afoul of truth-in-advertising rules. The FTC’s endorsement guidelines require transparency about the source of endorsements. A virtual (AI) persona can count as an endorser, and if that fact is hidden, it’s deceptive. The U.S. regulator has already warned that AI-generated “consumer” reviews or testimonials are illegal if undisclosed. The spirit of these rules is clear: you can’t fake a consumer’s voice. Brands that rely on verified customer footage stay compliant and stand apart in a crowded feed of simulations. Ultimately, UGC’s power comes from humanity: the quirks, emotions, and real-life contexts that no AI can truly replicate. Companies that respect and elevate real customer voices will win deeper loyalty than those that try to automate relatability.

When AI Content Crosses the Line: The Opossum Deepfake Example

A Fast Lesson in How AI-Generated Video Misleads

Nothing illustrates the dangers of unlabeled AI-generated video better than a recent viral deepfake involving an opossum. The clip showed an opossum encountering a mirror at night, then dramatically “playing dead” at the sight of its own reflection. It spread like wildfire on social media, especially among kids and animal lovers, because it was funny and seemingly real: the opossum appeared so startled by its mirror image that it toppled over in a cartoonish faint.

The problem? Opossums don’t actually behave that way. Wildlife experts and sharp-eyed viewers flagged the video as AI-generated. In reality, when opossums use their “playing dead” defense, the process is slow and involuntary.

They do not see themselves and do a theatrical trust-fall on cue. A real opossum under threat might freeze, hiss, and slump onto its side. Opossums rarely “play dead” unless they face a serious predator, and they would not bother with a mirror. The quick flip and odd mirror reflection gave it away.

Why Unlabeled AI-Generated Video Warps Reality

Why does a silly opossum video matter? Millions of people, including children, saw it and learned the wrong lesson about nature. As one Reddit user lamented after the hoax was revealed, “we’re not even 10 years into AI videos and this stuff is only going to get harder [to spot]. I wonder how children growing up with video not being able to be trusted will affect their view of the world?”

Many viewers will now carry a false idea of animal behavior. That is the point: once unlabeled synthetic clips feel normal, the same confusion scales to higher stakes. Today it’s an opossum. Tomorrow, it could be a deepfaked video of a public figure or an event that never happened. Unlabeled AI content pollutes the collective understanding of reality, whether it is animal behavior, personal reputations, or public history.

The opossum deepfake serves as a vivid cautionary tale for marketers and content creators. If a harmless-looking viral video can distort reality and erode trust, imagine the damage from an AI-generated testimonial or “real” customer story that people take at face value. The risk isn’t just academic. We’re already seeing instances of AI-generated “UGC” ads (e.g. fake video reviews with AI avatars) being used in marketing. If these aren’t clearly marked, consumers feel duped once the truth comes out. The backlash can be severe, from public outcry to regulatory enforcement.

The lesson is simple: don’t blur the line. If it’s simulated, make that clear from the start. The short-term engagement boost of a convincing fake isn’t worth the long-term hit to trust when your audience discovers the deception.

AI’s Incredible Benefits and When Human Authenticity Matters Most

Where AI Helps and Where It Hurts Trust

To be clear, taking a hard line on labeling and limiting AI in certain content is not an anti-AI stance. I acknowledge that artificial intelligence is a powerful, life-changing force for good in many domains. In fact, the same technologies that create deepfakes are also driving breakthroughs in medicine, accessibility, and productivity at work. AI’s impact on research is nothing short of revolutionary.

Where AI Delivers Real Benefits

Consider DeepMind’s AlphaFold. It predicted the 3D structures of over 200 million proteins, potentially saving “hundreds of millions of years” of research time and accelerating biological research and drug discovery worldwide. That is a real win.

AI also improves accessibility. Computer vision can describe images for blind users, and apps can generate real-time captions for deaf users. In productivity, studies found AI assistance can boost employee productivity by 24–25% on certain tasks, especially for less experienced workers.

These are profound positives: curing diseases, empowering individuals, and making our work lives more efficient.

Where Marketers Should Draw the Line

So where do we draw the line? Context matters. When authenticity, accountability, and emotional trust matter most, human-generated content should lead.

AI can help with editing, captions, or b-roll. But we should move carefully when content touches identity, credibility, or lived experience. For example, the use of AI to simulate a doctor’s voice to deliver information is unacceptable without proper disclosure and explanation. That would be a serious breach of trust. Similarly, an AI news anchor can read headlines, but a deepfake of a real journalist conveying “their” opinion crosses an ethical line.

Authenticity matters most when the message’s impact relies on human qualities, such as empathy, honesty, lived experience, personal expertise, or creative expression. These are things an AI, no matter how advanced, does not truly possess.

Setting the Boundaries

From a marketing point of view, use AI as a tool, not a mask. AI can streamline editing, generate captions, and support localization. That is helpful, as long as you practice AI content disclosure.

Do not use AI to fake a human connection. The emotional resonance of a customer story, an employee behind-the-scenes clip, or a fan testimonial comes from real people. That is why authentic UGC matters more as AI-generated video gets easier to produce. Most people still favor brands that come across as “authentic and honest.” Authenticity has become a premium in content. As perfect fakes multiply, genuine human stories and faces become even more impactful because they risk something real and thus earn trust.

The boundary is straightforward: use AI to assist creators, not impersonate them. This is marketing ethics for AI in practice.

Marketer’s Playbook in an AI+UGC World

- Synthetic video must be clearly identified in all customer-facing campaigns.

- Real customer footage should outweigh artificial substitutes to protect trust.

- Marketing teams need internal AI ethics guidelines to prevent misuse and reputational risk.

- Industry standards and provenance tools will define the future of AI-generated video transparency.

- Trust is an operational responsibility.

The marketing world now sits at the intersection of AI and UGC, and the way forward demands responsibility and transparency. As more campaigns blend AI-generated elements with real user content, brand leaders must establish guidelines to uphold trust every step of the way. The worst-case scenario is a “wild west” where anything goes and brands pump out convincing AI-generated influencer videos or customer reviews for quick gains. That short-sighted approach will inevitably backfire. Regulators are already on alert, and consumers will unleash backlash when they feel tricked. The only sustainable path is ethical integration of AI, which means being honest about what’s real and what’s synthetic in your campaigns.

That is the core of marketing ethics for AI.

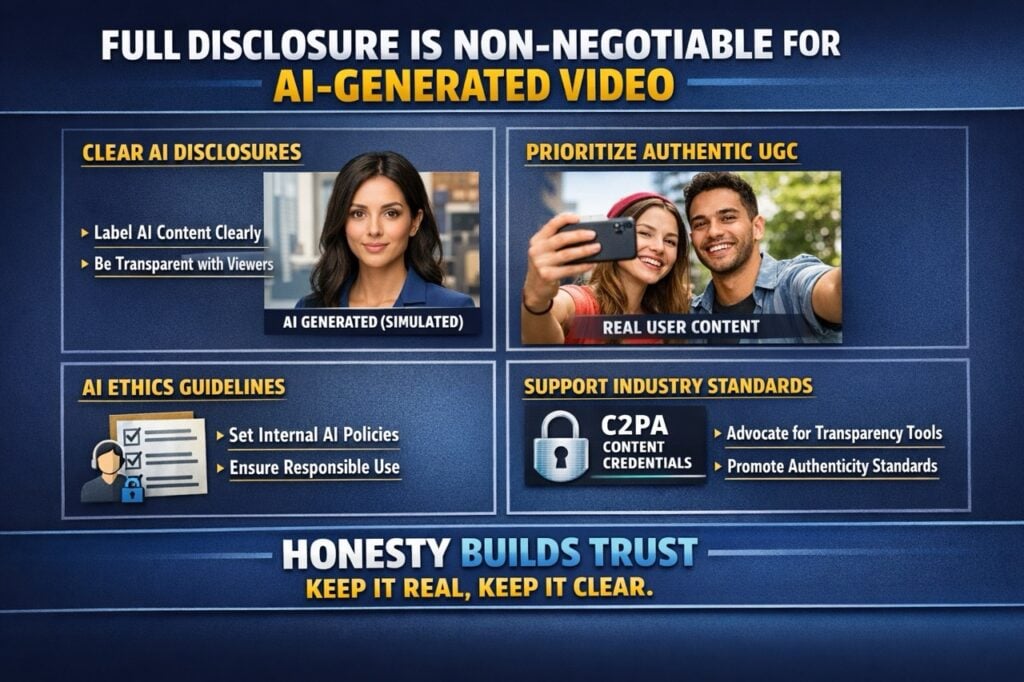

Full Disclosure Is Non-Negotiable for AI-Generated Video

So what does responsible practice look like? First, full disclosure whenever AI content is used in customer-facing communications. If your marketing team uses an AI avatar to represent a persona, introduce it clearly as a virtual character. If you are augmenting real footage with AI-generated scenes, label those scenes. It can be as simple as an on-screen label “(simulated)” during an AI segment of a video, or a caption in a social post. The FTC suggests that disclosure in videos should be clear and conspicuous, visible for the entire duration of the AI content, not hidden in fine print. Proactively labeling AI elements isn’t a weakness. It shows your brand has nothing to hide. In fact, it may become a selling point: a recent piece in TechDogs noted that many brands in 2026 are leaning into AI labeling as a trust signal to audiences, essentially saying “we use AI tools, and we’re proud to tell you when we do.”

Prioritizing Authentic UGC Over AI-Generated Video

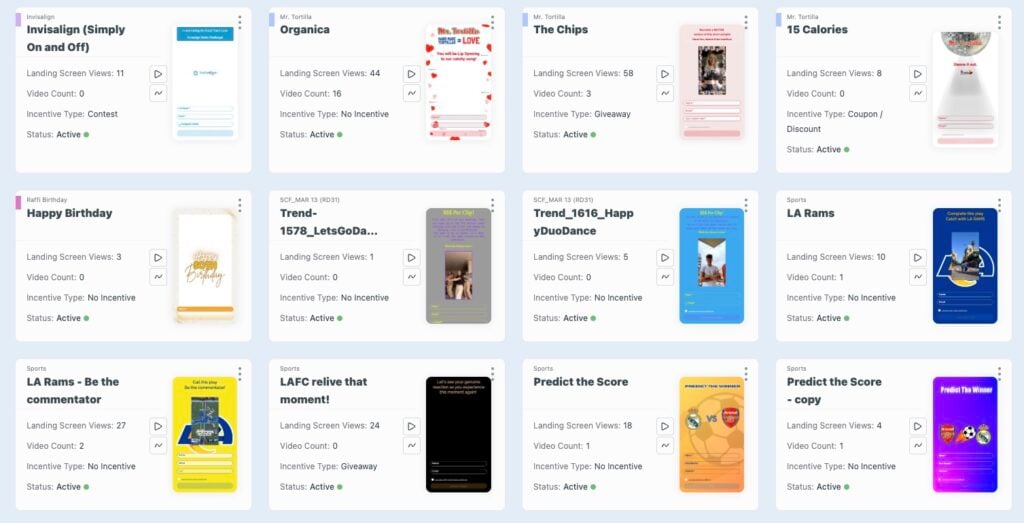

Second, elevate real UGC even more in your strategy. In a world where some competitors might cut corners with AI, doubling down on genuine user content can differentiate you. Encourage your actual customers, fans, and employees to share their stories on video. Invest in programs that make it easy for them to do so (for example, via UGC platforms like BrandLens). This not only yields authentic content but builds a community. When people see their peers championing a brand, it’s far more persuasive than the slickest AI-generated ad. Data backs this up: authentic user-generated videos often deliver engagement rates up to 4x higher than traditional polished ads. People simply connect more with relatable, human stories. By prioritizing authentic user-generated content (UGC), you’re also mitigating the risk posed by the deepfake crisis. This ensures your brand’s content repository is filled with verifiable, timestamped human moments that no competitor’s deepfake army can replicate.

Building Internal AI-Generated Video Ethics Guidelines

Third, implement internal guidelines for AI ethics in content creation. Just as companies have social media policies or branding guidelines, it’s time to have an “AI content ethics” guideline. This might include rules like: never impersonate a real person using AI; always obtain consent if using someone’s likeness with AI; never fabricate customer quotes or reviews; always label AI visuals; and maintain logs of what was AI-generated for accountability. Training your marketing teams and agencies on these principles is crucial. The goal is to create a culture where the default question for any new piece of content is: “Was any part of this synthetically generated, and if so, have we been transparent about it?” By baking that into your review process, you’ll catch potential issues before they go live.
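To make that review question concrete, here is a minimal sketch of a pre-publish check in Python. It is an illustration only, not a BrandLens feature or a legal compliance tool: the `ContentAsset` fields and the `review_asset` function are hypothetical names, and the two rules shown (label AI visuals, obtain consent for a real person's likeness) are simply lifted from the guideline list above.

```python
from dataclasses import dataclass, field


@dataclass
class ContentAsset:
    """One piece of campaign content awaiting review (hypothetical schema)."""
    title: str
    ai_generated_parts: list[str] = field(default_factory=list)  # e.g. ["b-roll"]
    disclosure_label: str = ""            # on-screen or caption text; empty if none
    uses_real_likeness: bool = False      # AI applied to a real person's face or voice
    likeness_consent_on_file: bool = False


def review_asset(asset: ContentAsset) -> list[str]:
    """Return guideline violations; an empty list means the asset passes."""
    problems = []
    # Rule: any synthetic element must carry a visible disclosure.
    if asset.ai_generated_parts and not asset.disclosure_label.strip():
        problems.append("AI-generated parts are present but no disclosure label is set")
    # Rule: never apply AI to someone's likeness without their consent.
    if asset.uses_real_likeness and not asset.likeness_consent_on_file:
        problems.append("AI use of a real person's likeness without consent on file")
    return problems
```

A team could run a check like this on every asset before it goes live and keep the `ai_generated_parts` field as the accountability log the guidelines call for. A real policy would cover more rules and would still need human review.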

Supporting Industry Standards for AI-Generated Video Transparency

Finally, as a marketer, advocate for industry standards and tools that support authenticity. Support efforts like the Content Authenticity Initiative and C2PA, which are developing technical standards for embedding provenance metadata into media. In fact, a coalition of governments and tech companies (including the NSA and others) recently endorsed C2PA’s Content Credentials framework to attach cryptographic proof of origin to images and video frames. This is on track to become an ISO standard by 2026, meaning future devices and platforms might automatically detect if a video has an authenticity certificate or not. Marketers have a responsibility not just to avoid deception, but to actively contribute to solutions that distinguish real from fake. After all, if consumers lose faith in the medium of video itself, every honest brand storyteller suffers. We must collectively protect the credibility of our craft.
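For readers curious what “cryptographic proof of origin” means in practice, the sketch below shows the general idea in Python: hash the video bytes, attach a few origin claims, and sign the result so later tampering is detectable. This is not the C2PA or Content Credentials format. The function names and claim fields are my own assumptions, and the shared-secret HMAC is a simplification, since real Content Credentials rely on certificate-based signatures.

```python
import hashlib
import hmac
import json


def make_manifest(video_bytes: bytes, creator: str, captured_at: str, key: bytes) -> dict:
    """Bind origin claims to the exact bytes of a video via hash plus signature."""
    claims = {
        "sha256": hashlib.sha256(video_bytes).hexdigest(),
        "creator": creator,
        "captured_at": captured_at,
        "ai_generated": False,
    }
    # Serialize deterministically so the same claims always sign the same way.
    payload = json.dumps(claims, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claims": claims, "signature": signature}


def verify_manifest(video_bytes: bytes, manifest: dict, key: bytes) -> bool:
    """True only if the claims are untampered and the video bytes still match."""
    payload = json.dumps(manifest["claims"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False
    return manifest["claims"]["sha256"] == hashlib.sha256(video_bytes).hexdigest()
```

The point for marketers is the property, not the code: if anyone edits the video or rewrites the claims after signing, verification fails, which is what lets a platform distinguish footage with an intact origin record from footage without one.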

BrandLens: A Pipeline for Verified Human Video Content

A Practical Path to Authentic UGC

Sourcing real video content at scale has become harder in an AI-saturated world. This is where BrandLens comes in. BrandLens is built as a controlled capture pipeline: every video is created live by a person through our camera, inside guided templates, and delivered directly to brands. Instead of trying to detect authenticity after the fact, the platform produces human content by default.

- Live Human Capture by Design: Every video is recorded live inside the BrandLens camera. No uploads. No generated clips.

- AI-Assisted Prompts, Human Performance: Campaign prompts can be refined with AI or creative tools to improve clarity and storytelling. The structure may be optimized, but the video itself is always performed by a human.

- Direct Secure Submission Flow: Content moves straight from the user’s device into the BrandLens system via a link or QR code. There is no app to install, no file handling, and no content injection.

- Moderation Without Content Generation: AI can support filtering, organization, and review workflows. It never alters or creates video, keeping every submission fully human.

- Authenticity at Scale: Brands can run large campaigns while maintaining real human content across hundreds or thousands of submissions. The result is a trusted library of genuine video ready for use across marketing and experiences.

How Brands Operationalize Authentic UGC

In short, BrandLens gives you control, speed, and authenticity all at once. It’s a way to embrace the real power of UGC (community trust and engagement) without falling into the trap of synthetic shortcuts. Every video collected is an asset that can build long-term loyalty because viewers can sense it’s legitimate. And as regulations like the FTC rules evolve, using BrandLens helps you stay on the right side of them. Our platform includes features like on-screen disclosure cues or template overlays if needed, so that any required labeling or permission is baked in. We view ourselves as partners in ethical, human-centered marketing. By combining human creativity with smart technology (for workflow, not content generation), BrandLens delivers what we like to call a “trust pipeline” for brand video.

Conclusion: Human Authenticity Is Your Brand’s Most Valuable Asset

Trust Will Become the Ultimate Differentiator

For CMOs and brand leaders, the mandate is clear: treat verified human video as your most valuable brand asset. Authentic videos created by your real customers, employees, and fans are not just content. They are trust incarnate, the digital social proof that your brand is what it claims to be. No amount of AI glitz can replicate the credibility of a genuine human story on camera. By clearly distinguishing synthetic content from customer stories, you protect your brand’s credibility and earn long-term trust.

Building Trust Through Authentic Video Creation

Now is the time to lead on this issue. Establish strict guidelines for your teams and agencies around AI content. Educate your audience that your brand values honesty. You might even make it part of your messaging that “all our testimonials are 100% human, never AI.” Consumers are hungry for honesty amid the chaos; they will reward brands that provide it. And if you need a partner to scale up authentic video creation, consider what BrandLens offers as a platform purpose-built for this authenticity challenge. We can help you source and share real human videos with confidence, backed by verification and consent at every step.

Embrace Authenticity in Marketing

Don’t wait for a scandal or regulation to force your hand. Embrace the authenticity imperative now. In a world increasingly saturated with deepfakes and synthetic influencers, your commitment to real human content can set you apart. Let your competitors chase the latest AI quick fix while you double down on trust. In the end, marketing is a people business, and those who remember that will earn lasting loyalty. Choose human authenticity. Your audience will thank you for it.

Ready to amplify your marketing with genuine human stories? Start by leveraging your greatest asset: your real customers and community. If you’re looking for a streamlined way to collect authentic video testimonials, stories, and UGC at scale with all the verification and compliance built in, schedule a demo call with us.

Let’s build the future of marketing on truth, together.